Fly Smarter: How Modern Flight Controllers Enable Drone Autonomy

Keeping a drone in the air used to be the job of a pilot’s steady thumbs. Today, it’s the work of silicon. Modern flight controllers (FCs) do much of the heavy lifting for pilots, automating tasks such as stabilization, obstacle avoidance, and even fully autonomous flight by combining onboard computing, sensor fusion, machine learning, and mesh networking.

What a Flight Controller Really Does

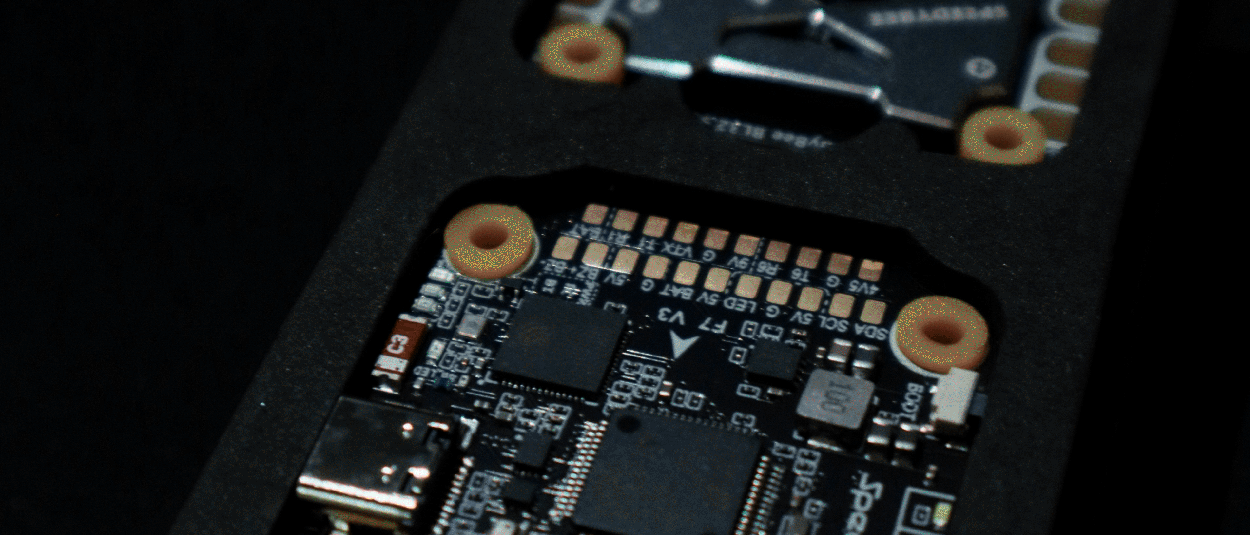

A flight controller is the circuit board inside a drone that interprets the aircraft’s movements and the operator’s inputs using a combination of sensors and software. Its capabilities include:

- Sensor data processing from the IMU, INS, and GPS (when available)

- Motor control, based on processed sensor data about flight conditions

- Altitude and orientation management, based on operator commands

- Automated sequence performance (e.g., landing, takeoff, return to home)

- Obstacle detection, interference management, and auto-flight stabilization

Without this constant background work, UAVs would drift, wobble, or crash when conditions get rough.

Autonomy Technology in Modern Flight Controllers

Autonomy comes from a stack of technologies working in concert inside the flight controller. Edge computing brings processing power onboard. Sensor fusion blends multiple data streams into a coherent flight picture. Machine learning adds adaptability in unpredictable conditions. Mesh networking connects drones into resilient, cooperative fleets. Together, these layers move UAVs from manual control to full autonomous capability.

Edge Computing

Modern flight controllers are built around ARM-based processors, ranging from microcontrollers such as the STM32H7 to system-on-chip (SoC) modules such as the NVIDIA Jetson Nano or Orin Nano. The more capable SoCs combine CPU cores, a GPU or other AI accelerator, memory, and I/O interfaces, allowing the drone to run tasks locally instead of sending data back to the ground control station.

Benefits include:

- Low-latency control loops at >400 Hz for precise motor actuation

- Onboard CV algorithms for obstacle detection or target recognition without requiring uplink bandwidth

- Energy optimization algorithms running in real time to extend endurance

This makes the UAV less dependent on external processing and more resilient in GNSS/GPS-denied operations.
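To make the control-loop point concrete, here is a minimal sketch of a PID rate controller stepped at 400 Hz. Everything in it is an illustrative assumption rather than code from any specific flight stack: the `PID` class, the gains, and the toy first-order roll-rate model are hypothetical and chosen only so the loop settles.

```python
from dataclasses import dataclass


@dataclass
class PID:
    """Hypothetical PID controller with output clamping and simple anti-windup."""
    kp: float
    ki: float
    kd: float
    out_limit: float
    integral: float = 0.0
    prev_measured: float = 0.0

    def update(self, setpoint: float, measured: float, dt: float) -> float:
        error = setpoint - measured
        candidate_integral = self.integral + error * dt
        # Derivative on the measurement avoids a spike when the setpoint jumps.
        d_measured = (measured - self.prev_measured) / dt
        self.prev_measured = measured
        raw = (self.kp * error
               + self.ki * candidate_integral
               - self.kd * d_measured)
        out = max(-self.out_limit, min(self.out_limit, raw))
        # Anti-windup: keep the new integral only while the output is unsaturated.
        if out == raw:
            self.integral = candidate_integral
        return out


def simulate_roll_rate(target: float = 20.0,
                       seconds: float = 2.0,
                       loop_hz: float = 400.0) -> float:
    """Run the loop against a toy plant and return the final roll rate (deg/s)."""
    dt = 1.0 / loop_hz
    pid = PID(kp=0.02, ki=0.2, kd=0.0001, out_limit=1.0)
    rate = 0.0
    for _ in range(int(seconds * loop_hz)):
        command = pid.update(target, rate, dt)
        # Assumed plant: the command accelerates the roll rate, drag damps it.
        rate += (1000.0 * command - 5.0 * rate) * dt
    return rate


if __name__ == "__main__":
    print(f"roll rate after 2 s: {simulate_roll_rate():.2f} deg/s")
```

A real flight controller runs one such loop per axis and feeds the outputs into a motor mixer. The short, fixed time step is what makes the onboard latency budget matter.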

Sensor Fusion

SoCs also enable another key capability of flight controllers: multi-sensor data integration. Raw IMU data (e.g., from the accelerometer and gyroscope) is drift-prone, and magnetometers are sensitive to interference. To compensate for individual sensor deficiencies, you can run an Extended or Unscented Kalman Filter (EKF/UKF) on the drone.

At Bavovna, we typically train custom hybrid INS navigation models on fused data from accelerometer, gyroscope, compass, barometer, and multi-vector airflow sensors to enable high-precision, autonomous flights in GPS-denied environments. But you can also layer LiDAR range data for extra corrections.

What you gain as a result is:

- Drift reduction, thanks to continuous correction of IMU errors against more stable sensor inputs

- Noise cancellation as filters suppress random spikes or jitter from individual sensors

- Flight precision even in GPS-denied environments, based on INS data alone

- Smoother control loops and reduced oscillation, thanks to cleaner state estimates feeding the PID controller

- Greater situational awareness, along with real-time obstacle detection and terrain-relative navigation
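The drift-correction idea behind these gains can be sketched with a small linear Kalman filter. Note that this is a deliberately simplified stand-in for the EKF/UKF mentioned above, not Bavovna's model: it fuses only a biased accelerometer with a noisy barometer to estimate altitude. The `AltitudeKalman` class, the noise values, and the simulated flight are all assumptions made for illustration.

```python
import random


class AltitudeKalman:
    """Hypothetical 2-state (altitude, vertical speed) linear Kalman filter."""

    def __init__(self, baro_var: float, q_alt: float = 1e-4, q_vel: float = 1e-2):
        self.h = 0.0   # altitude estimate, m
        self.v = 0.0   # vertical speed estimate, m/s
        self.p00, self.p01, self.p10, self.p11 = 1.0, 0.0, 0.0, 1.0  # covariance
        self.r = baro_var  # barometer noise variance
        self.q_alt = q_alt  # process noise added to altitude each step
        self.q_vel = q_vel  # process noise added to vertical speed each step

    def predict(self, accel: float, dt: float) -> None:
        """Propagate the state with the accelerometer reading (drifts on its own)."""
        self.h += self.v * dt + 0.5 * accel * dt * dt
        self.v += accel * dt
        # P = F P F^T + Q with F = [[1, dt], [0, 1]]
        p00 = self.p00 + dt * (self.p10 + self.p01) + dt * dt * self.p11 + self.q_alt
        p01 = self.p01 + dt * self.p11
        p10 = self.p10 + dt * self.p11
        p11 = self.p11 + self.q_vel
        self.p00, self.p01, self.p10, self.p11 = p00, p01, p10, p11

    def update(self, baro_alt: float) -> None:
        """Correct the prediction with a barometer altitude measurement."""
        innovation = baro_alt - self.h
        s = self.p00 + self.r
        k0, k1 = self.p00 / s, self.p10 / s
        self.h += k0 * innovation
        self.v += k1 * innovation
        # P = (I - K H) P with H = [1, 0]
        p00 = (1.0 - k0) * self.p00
        p01 = (1.0 - k0) * self.p01
        p10 = self.p10 - k1 * self.p00
        p11 = self.p11 - k1 * self.p01
        self.p00, self.p01, self.p10, self.p11 = p00, p01, p10, p11


def run_demo(seconds: float = 20.0, hz: float = 50.0, seed: int = 0):
    """Return (dead-reckoning error, fused error) in metres after a steady climb."""
    rng = random.Random(seed)
    dt = 1.0 / hz
    kf = AltitudeKalman(baro_var=0.25)
    true_h, true_v = 0.0, 1.0   # simulated truth: constant 1 m/s climb
    dr_h, dr_v = 0.0, 1.0       # accelerometer-only integration
    accel_bias = 0.2            # assumed uncorrected accelerometer bias, m/s^2
    for _ in range(int(seconds * hz)):
        true_h += true_v * dt
        accel = accel_bias + rng.gauss(0.0, 0.05)
        baro = true_h + rng.gauss(0.0, 0.5)
        dr_h += dr_v * dt + 0.5 * accel * dt * dt
        dr_v += accel * dt
        kf.predict(accel, dt)
        kf.update(baro)
    return abs(dr_h - true_h), abs(kf.h - true_h)


if __name__ == "__main__":
    dr_err, kf_err = run_demo()
    print(f"accelerometer only: {dr_err:.1f} m off, fused: {kf_err:.2f} m off")
```

The accelerometer-only estimate keeps integrating its bias, while the fused estimate stays close to the truth because each barometer reading pulls it back. An EKF or UKF follows the same predict/update cycle with nonlinear motion and measurement models.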

Machine Learning

Machine learning turns fused sensor data into real-time insight for smoother navigation. Pre-trained models can recognize patterns of drift, turbulence, or interference before they destabilize the UAV and adjust the controls automatically. Bavovna’s AI Hybrid INS model, for instance, achieved 99.99% accuracy in GNSS-denied flight by continuously adapting to changing dynamics.

You can also train ML models for other use cases, such as:

- Predictive maintenance. Algorithms can parse flight logs for early signs of motor imbalance, sensor degradation, or structural vibration, enabling proactive repairs.

- Energy optimization. Models trained on power-efficient flight patterns can dynamically manage throttle to extend battery life without sacrificing stability.

- Signal integrity monitoring. ML can detect jamming/spoofing signatures in RF/GNSS signals and suggest switching to other frequencies or inertial-based navigation.
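As a rough illustration of the predictive maintenance case, the sketch below learns a vibration baseline from healthy flight logs and flags windows that deviate from it. It is a plain statistical z-score detector standing in for a trained model, not a production ML pipeline. The `VibrationBaseline` class, the threshold, and the synthetic sine-wave "logs" are all hypothetical.

```python
import math
import statistics


def vibration_rms(samples):
    """Root-mean-square amplitude of one window of vibration samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


class VibrationBaseline:
    """Hypothetical detector: fit on healthy windows, score new ones by z-score."""

    def fit(self, healthy_windows):
        rms_values = [vibration_rms(w) for w in healthy_windows]
        self.mean = statistics.fmean(rms_values)
        self.std = statistics.stdev(rms_values)
        return self

    def z_score(self, window):
        return (vibration_rms(window) - self.mean) / self.std

    def is_anomalous(self, window, threshold=4.0):
        # Only unusually strong vibration is flagged in this sketch.
        return self.z_score(window) > threshold


def synthetic_window(amplitude, n=200, samples_per_cycle=50):
    """Stand-in for logged accelerometer data: a sine wave of given amplitude."""
    return [amplitude * math.sin(2.0 * math.pi * k / samples_per_cycle)
            for k in range(n)]


if __name__ == "__main__":
    healthy = [synthetic_window(a) for a in (0.95, 1.0, 1.05, 0.98, 1.02)]
    detector = VibrationBaseline().fit(healthy)
    print("normal flight flagged:", detector.is_anomalous(synthetic_window(1.01)))
    print("imbalanced motor flagged:", detector.is_anomalous(synthetic_window(2.0)))
```

In practice you would replace the RMS feature and the fixed threshold with features and a model validated on your own fleet's logs, but the fit-then-score workflow stays the same.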

Mesh Networking

Mesh networking protocols allow drones to establish P2P communication links, instead of relying on a single ground control station or relay tower.

Each UAV acts as both a node and a router, dynamically forwarding data packets between neighbors. This creates a Mobile Ad Hoc Network (MANET) where connections are self-forming and self-healing. If one link is lost due to terrain, interference, or node failure, data automatically reroutes through the remaining drones. Flight controllers with integrated radios and networking protocols manage this routing in real time, ensuring continuous communication across the fleet and coordinated flying.

This enables a host of new operational scenarios for:

- ISR & tactical operations: Drone swarms can share live video and sensor feeds, even when only one has a direct uplink to the command center.

- Search & rescue missions: Mesh networking enables extended coverage across the operating site, relaying data to rescuers despite terrain obstacles.

- Disaster response: RC UAVs can provide temporary communication infrastructure for first responders at affected sites by acting as aerial relays.

- Industrial inspections: Coordinated drone swarms can scan large industrial assets in less time and share data without overwhelming a single control link.
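The self-healing behavior described in this section can be sketched as a route search over whatever links are currently alive. This is a minimal illustration using breadth-first search, not an implementation of a real MANET routing protocol such as OLSR or AODV. The node names and the `shortest_route` helper are hypothetical.

```python
from collections import deque


def shortest_route(links, source, target):
    """Return the fewest-hop route over undirected links, or None if unreachable."""
    neighbors = {}
    for a, b in links:
        neighbors.setdefault(a, set()).add(b)
        neighbors.setdefault(b, set()).add(a)

    previous = {source: None}   # also serves as the visited set
    queue = deque([source])
    while queue:
        node = queue.popleft()
        if node == target:
            route = []
            while node is not None:
                route.append(node)
                node = previous[node]
            return route[::-1]
        for nxt in sorted(neighbors.get(node, ())):
            if nxt not in previous:
                previous[nxt] = node
                queue.append(nxt)
    return None


if __name__ == "__main__":
    # Assumed fleet: four drones and one ground control station (gcs).
    links = {
        ("uav1", "uav2"), ("uav2", "gcs"),
        ("uav1", "uav3"), ("uav3", "uav4"), ("uav4", "gcs"),
    }
    print(shortest_route(links, "uav1", "gcs"))
    # The uav2 uplink drops out, so traffic reroutes through uav3 and uav4.
    degraded = links - {("uav2", "gcs")}
    print(shortest_route(degraded, "uav1", "gcs"))
```

Each drone would run this kind of decision continuously as links appear and disappear, which is why the routing logic needs to live on the flight controller or its companion computer rather than on the ground.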

Conclusion

Drone flight controllers have evolved from simple stabilizers into advanced onboard computers capable of full autonomy. Thanks to edge computing, sensor fusion, ML, and mesh networking, you can fly your aircraft without second-guessing your commands or compromising flight stability.

Learn how Bavovna’s AI hybrid INS kit enables greater autonomy in drone navigation by combining sensor fusion data with pre-trained AI models.